How Twitter Bots Are Shaping the Election

Between the first two presidential debates, a third of pro-Trump tweets and nearly a fifth of pro-Clinton tweets came from automated accounts.

By Douglas Guilbeault and Samuel Woolley, November 1, 2016

There is power in numbers, or so the saying goes. But statistics mean different things to different people. Take Donald Trump and Hillary Clinton, for instance.

Last week, the conservative political commentator Scottie Nell Hughes summed up how her side reads the numbers in a conversation with Anderson Cooper. “The only place that we’re hearing that Donald Trump honestly is losing is in the media or these polls,” she said. “You’re not seeing it with the crowd rallies, you’re not seeing it on social media, where Donald Trump is two to three times more popular than Hillary Clinton on every social media platform.”

The candidate himself has echoed this argument. During the first presidential debate, he touted his 30-million-strong following on Facebook and Twitter as a sign of mainstream popularity not reflected elsewhere.

The Clinton campaign, meanwhile, has stuck by traditional polls as evidence of her success.

All these numbers, whether social-media followings, polls, or other statistics, are only as reliable as the tools used to produce them. Political campaigns worldwide now use bots (software designed to perform tasks online automatically) to game online polls and artificially inflate social-media traffic. Recent analysis by our research team at Oxford University reveals that more than a third of pro-Trump tweets and nearly a fifth of pro-Clinton tweets between the first and second debates came from automated accounts, which produced more than 1 million tweets in total. This data corroborates recent reports suggesting that both candidates’ social-media followings are highly automated.

What does this mean for democracy?

* * *

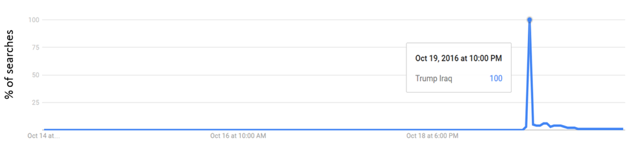

It is fashionable among futurists and technocratic utopians to wax poetic about robot butlers, self-driving cars, and the internet as a democratizing tool. And it is certainly true that social media and other digital tools can enhance the election process. Never before has an election been subject to so much real-time fact-checking. During the final debate, Clinton asked viewers to Google the words “Trump” and “Iraq,” and they did. Google Trends shows a huge surge in searches containing these words within minutes of Clinton’s request.

(Google Trends)

At the same time, aspects of social-media infrastructure introduce major problems. Bots are easy to program through the Twitter API (application programming interface) and can be deployed by just about anyone with basic coding knowledge. Marginal groups use bots to create an illusion of popularity around fringe issues or political candidates. For instance, the alt-right and white nationalists have used these automated proxies to artificially amplify hate speech and xenophobia on social-media platforms. Hoaxers from 4chan have been accused of using bots to game online polls in favor of Trump. And Trump often cites online polls as evidence that he has “won” the debates.

Our team monitors political-bot activity around the world. We have data on politicians, government agencies, hacking collectives, and militaries using bots to disseminate lies, attack people, and cloud conversation. The widespread use of political bots solidifies polarization among citizens. Research has revealed social-media users’ tendency to engage with people like themselves, a pattern social scientists call homophily. In the book Connected, Nicholas Christakis and James Fowler suggest that social media encourages homophily. Online networks reproduce what is seen in offline social networks: birds of a feather flock together. This segregation often leads citizens to consume only news that reinforces their own ideology and that of their peers.

In this year’s presidential election, the size, strategy, and potential effects of social automation are unprecedented; never have we seen such an all-out bot war. In the final debate, Trump and Clinton readily condemned Russia for attempting to influence the election via cyberattacks, but neither candidate has mentioned the millions of bots that work to manipulate public opinion on their behalf. Our team has found bots supporting both Trump and Clinton that harness and amplify online echo chambers. One pro-Trump bot, @amrightnow, has more than 33,000 followers and spams Twitter with anti-Clinton conspiracy theories. It generated 1,200 posts during the final debate. Its competitor, the recently created @loserDonldTrump, retweets every mention of @realDonaldTrump that includes the word “loser,” producing more than 2,000 tweets a day. These bots represent a tiny fraction of the millions of politicized software programs working behind the scenes to manipulate the democratic process.

Bots also silence people and groups who might otherwise have a stake in a conversation. Even as they make some users seem more popular, they make others less likely to speak. This “spiral of silence” leads to less discussion and less diversity in politics. Moreover, bots used to attack journalists might cause them to stop reporting on important issues for fear of retribution and harassment.

* * *

The propagandistic power of bots is strengthened when few people know they exist. Homophily is particularly strong when people believe they have strength in numbers, and bots give the illusion of such strength. The more people know about bots, the more likely citizens are to report them and push for their removal, as well as to use bots to boost their own voices.

Because bots have received little public attention, critics regularly frame them in doomsday rhetoric that dwells on their negative impact. But bots aren’t used only to manipulate unsuspecting publics. Socially empowering bots are out there too. For instance, @she_not_he is “a bot politely correcting Twitter users who misgender Caitlyn Jenner.” Silicon Valley has also been developing bots to broaden civic engagement: On Facebook, The New York Times’ election bot promotes political participation.

In addition to social-justice bots, there are bot services that seek to enhance everyday life online. Digital artists build bots that generate humorous messages, such as @godtributes, which takes other users’ tweets and reframes them as impassioned praise of a generic god: “Self-education for the self-education god!” The artificial-intelligence company Luka is designing intelligent bots that “you teach and grow through conversation.” These digital automatons are marketed as a new form of online companion.

While many people are unsettled by the rise of bots, it’s important to remember that many of today’s most ubiquitous technologies were harnessed for political ends when they were first invented. In Europe, the printing press was almost immediately used to fuel the cultural war between Protestants and Catholics. Martin Luther, a seminal figure in the Protestant Reformation, was the first to take advantage of it, printing thousands of Bibles translated into German. The proliferation of the Lutheran Bible triggered a surge in German nationalism, which amplified conflict between the faiths. Centuries later, after the printing press had become widespread and accessible, it provided a tool for minority and dissident voices fighting for social justice, such as the suffrage and civil rights movements.

* * *

Currently, there is almost no regulation of the use of bots in politics. The Federal Election Commission has shown no sign of even recognizing that bots exist. Bots that are used to trumpet hate speech, harass women journalists, and spread propaganda are also designed to conceal the identity of their creators. This layer of anonymity makes it difficult to hold anyone legally responsible. It also challenges notions of free speech: What happens when a bot, which might do things its maker never foresaw, is the entity committing malicious acts?

Fortunately, methods for pinpointing the sources of bots are constantly improving. Continued work on detecting, tracking, and disabling malicious bots will allow governments to set legal precedents for fraudulent and harmful automated abuse. It will also improve social-media websites’ ability to detect and regulate bots in what has become an ever-escalating arms race against bot designers. Facebook and Twitter can flag accounts suspected of being under bot control (bots tend to tweet several times a minute, and their comments are often less coherent than human users’), yet bot designers regularly tell us they have little trouble evading detection. Moreover, the debate over whether social-media websites should be allowed to censor posts, or even want to, has limited the sites’ detection efforts.

Technology firms are investing in the new bot economy, which some say is the future of digital communication. Bots are being incorporated into services like Facebook Messenger, and platforms like Kik, Slack, and Periscope claim that bots will greatly enhance their user experience by acting as automated assistants that handle a wide range of needs and requests, from checking the weather to booking plane tickets.

These developments foreshadow a future in which bots will be intimately interwoven with everyday social interaction online. As bot awareness and usage grow, so will the pressure on governments, nonprofit organizations, and research institutions to develop bots that protect and empower citizens rather than ones that try to convince people to vote for particular presidential candidates.
